Doomsday or business as usual? Artificial intelligence, machine learning, and CBRN threats

Dan Kaszeta

Chemical, biological, radiological, and nuclear (CBRN) threats are potential sources of disaster in modern life. Enemies might be able to use them for some sort of advantage, terrorists could use them for havoc, and accidents could cause disruption, property loss, and death. The same could be said of the broad field of artificial intelligence and machine learning. This expanding field has intersected with that of CBRN in both practical and theoretical ways. There are areas for concern. But how concerned should we really be? As is often the case, concern can also bring opportunity. As this new aspect of modern life will be with us from now on, it is worth examining some of the more prominent ways in which AI and machine learning may penetrate CBRN defence and security.

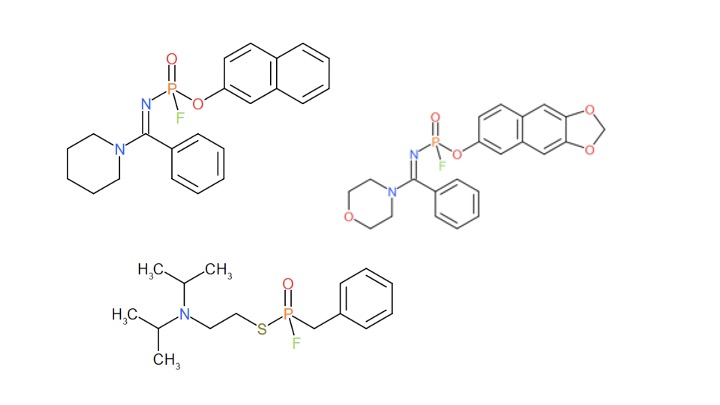

Artificial poisons?

AI could make new threat materials. Numerous accounts in both popular and specialist publications have talked about AI generating novel toxic compounds. Various experiments have set AI models loose to create, on paper, new molecules. Within hours, AI models were creating molecules deemed to be poisonous. Some likely had toxicological properties that make them possible ‘nerve agents’ – a category of chemicals that includes some of the deadliest chemical warfare agents. A fair bit of alarm and hand-wringing accompanies some of these accounts.

A little bit of context is helpful in determining whether to get alarmed by this talk of synthesising chemicals. First, it is not necessary to use AI to do this. The rules for assembling plausible chemical compounds are relatively straightforward. A fairly simple bit of software code or a modestly trained undergraduate will spit out thousands of plausible structures for new chemical compounds. Moreover, even if you did it at random, of course you would get poisonous compounds. Anything you do to create new compounds will inevitably create poisons. Nearly every plausible chemical compound is likely to have toxicity at some level or concentration. Very few things are biologically inert. Even water is poisonous in high enough doses.

In reality, the AI algorithms that are alleged to be poison-generators are actually ‘everything generators’ because basically everything they make is a poison. Given the state of computational toxicology, just how poisonous a novel compound is, without test data, is not much better than guesswork. Some are just nonsense compounds, theoretically possible but impossible in real life. Promethium fluoride is plausible on paper, and almost certainly toxic. Yet with only 500-600 grams of promethium on the entire planet, dispersed in the Earth’s crust in vanishingly small traces, it is not really plausible. However, an AI tasked to find poisons may churn it out on the list.

Credit: Chem4Word

Another point is that many compounds are possible medicines. The difference between something medicinal and something harmful is the dose. Some nerve agents are medicinal in fact, such as the drugs pyridostigmine and physostigmine. AI could discover medicines as well as poisons. Even optimising an AI to select for theoretical toxicity is not actually as relevant as one might think. Toxicity in poisons is basically an already solved problem. Ample poisons exist in nature and science. Yet many poisons are also medically useful, even extremely toxic compounds, if used in dilute form. We must recognise the complexity of modern chemistry and biology.

There are also some limiting factors. The size and complexity of molecules is an important point. Since there are only so many types of atoms in the universe (as defined by the periodic table of elements), and only so many ways in which you can stick them together, there’s only so much scope for what AI (or a simpler algorithm) can do. Simply stated, all of the small molecules are already spoken for. New compounds are likely to be large, unwieldy compounds that are solids at room temperature. Solids tend to make poor chemical warfare agents. Additionally, some that might be theoretically poisonous might also be practically insoluble, so would have a hard time being used as a poison. All told, a diagram of a plausible molecule is a long way from manufacturing.

Given this author’s own experiments with AI, asking the natural follow-on questions of ‘So, AI, how do I actually make this molecule?’ and ‘What tools do I need?’ will get you some interesting but not terribly useful results. We would be right to also consider biology, but the nexus between AI and artificial design of biological materials is, hopefully, still some way off in practical terms. AI at present will point you in the right direction to make some poisons – but so will a library card.

Industrial hazards

Another area of concern is the encroachment of AI onto industry. AI and various aspects of machine learning are in use in industry, and the extent to which this is already happening and what the level of potential threat may be is difficult to ascertain due to the opaque nature of many industrial processes. This area of concern impinges upon CBRN because of various industries that use CBRN materials. Is it possible that AI could cause a disaster with chemical or radiological materials? The difference between a CBRN terrorist incident and an industrial hazardous materials accident with toxic chemicals lies in intent and mechanism, but not in the actual harm produced.

Credit: US Army/Sgt 1st Class Samantha M. Stryker

AI in industry, commerce, and transport is therefore a CBRN AI safety issue. Industrial processes could be overseen by various AI-type tools, and these processes could have accidents. Transportation networks could be overseen by AI and cause accidental releases. Nuclear power accidents could occur. For now, these hazards are fairly well circumscribed in industry. Regulators and standardisation bodies are active in this area. We are not at a critical point right now, but continued vigilance is needed as AI and machine learning become more ubiquitous.

What is the feedstock of AI?

We are right to ask the question ‘how does this all work?’ A factor that needs to be strongly considered is that large language models are trying to cobble together answers to questions based on looking at the widest possible sets of data. In the case of CBRN weapons and technologies, where does the information come from? We should never assume that every bit of information that is relevant to an AI query – whether innocent or malevolent – is available for a model to search.

It may be best to illustrate this with an example. You can ask an AI a query such as ‘give me a good recipe for a chocolate cheesecake’ and it will give you some results. It will look through thousands of cookbooks, web posts, articles, and possibly even YouTube videos and give you a result. However, not every chocolate cheesecake recipe in human history is available for searching. Some cookbooks may be old and in languages that the AI has trouble understanding. An AI might be clever enough to deal with a recipe that starts out ‘start like in the plain cheesecake recipe, but at step 4 add some cocoa powder.’ Yet what about other sources of information? How well does AI read odd fonts and typography in other alphabets? Or, say, a Czech recipe in a blurry scan of a newspaper article from 1935? So far, there will be a bias towards material that is written in English, or at least in easily-readable graphic form. But the AI will not have access to your grandmother’s hand-written recipe in a desk drawer. There will be many thousands of such recipes out there, unavailable for online search.

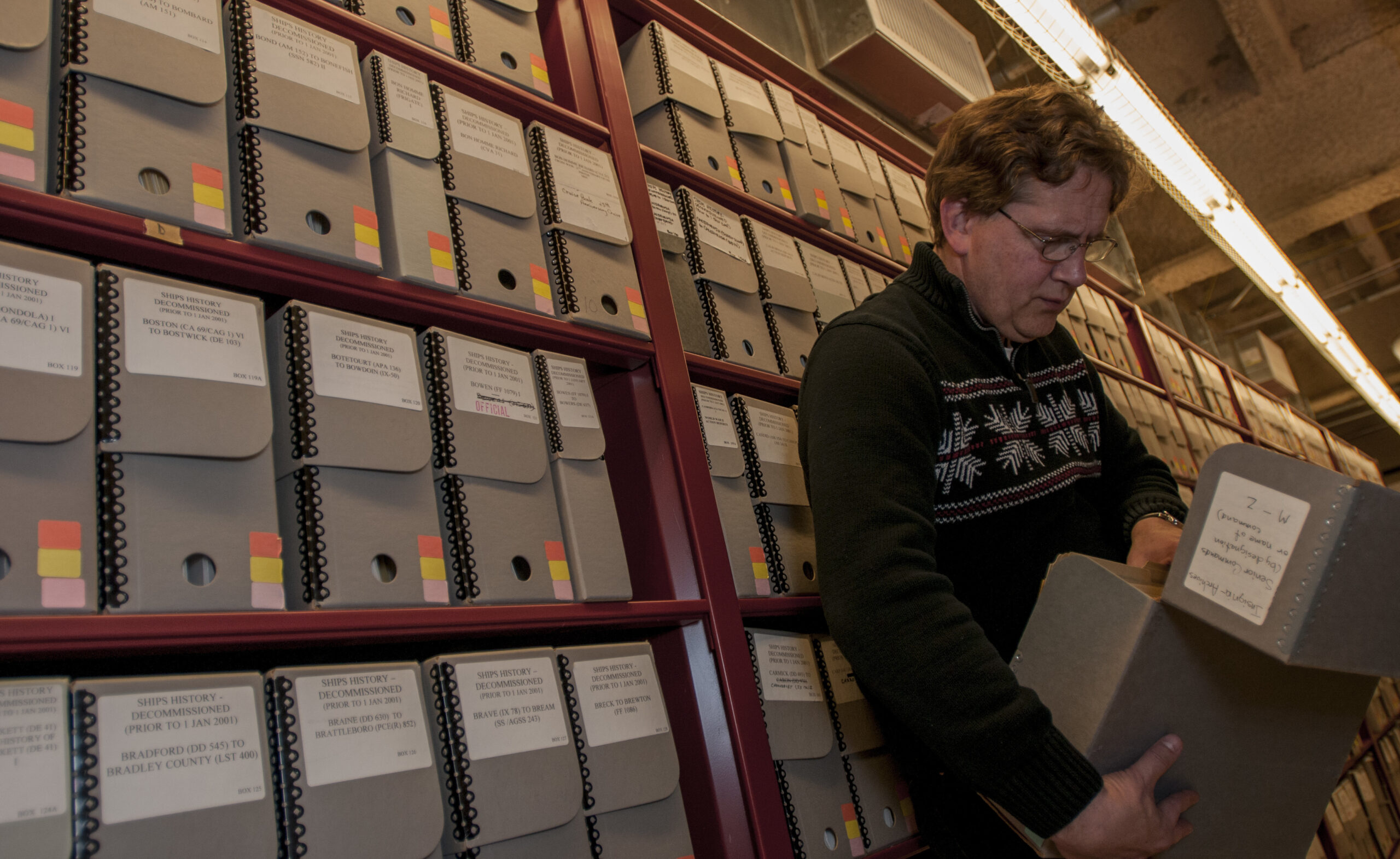

Credit: US Navy/Specialist 2nd Class Eric Lockwood

The reason why this allegorical example is relevant is that much, possibly even most, of the really useful information from the large state programmes that made CBRN weapons is not available online for AI tools to parse and digest. The good bits of these programmes are basically the equivalent of your grandmother’s chocolate cheesecake recipe. They exist, but they are in hard copy locked up in archives. They are not searchable or discoverable online. Some, such as notes from the original German scientists who invented nerve agents, are available to keen researchers in an archive. However, they are not reproduced online and are handwritten, so even if they were available they might be a challenge to read. Others are deeply buried behind procedural and physical safeguards. AI simply does not have access to the archived or lost secrets of the old national production programmes.

Misinformation, disinformation, and propaganda

One problematic area in CBRN affairs in the current day is alleged or fake incidents. In conflicts in recent years, there have been numerous incidents that were either innocently or maliciously misrepresented as possible CBRN incidents. In the hands of people who wish to create mayhem, AI represents a serious threat. Misinformation is incorrect information spread unintentionally. Disinformation is false information that is spread maliciously. It can be difficult to tell the two apart, and incorrect information starting innocently out of lack of background knowledge can be deliberately spread farther. Likewise, disinformation started maliciously can be passed along by people who simply do not know any better.

We can look at recent conflicts and see numerous instances of misinformation and disinformation in the subject area. Incidents involving Sarin and Chlorine in Syria were variously denied, misrepresented, and misattributed. Tear gas and smoke grenades in several countries have been wrongly described as ‘nerve agents’ or ‘mustard gas’ during various incidents. The 4 August 2020 large fertiliser explosion in Beirut was alleged by some to be a nuclear explosion before the truth came to light. Similarly, in the Ukraine conflict, tear gas grenades have been misrepresented as the chemical chloropicrin, yellow- or orange-coloured explosions due to nitrate explosive material have been alleged to be chemical warfare agents, and very old chemical warfare test kits were passed off as samples of Sarin. A large hazardous materials accident in the US state of Ohio was widely but erroneously described on social media as emitting ‘mustard gas’. Hardly a day goes by in the current Israel-Gaza conflict without some kind of similar claim being made.

Credit: US Army/Sgt Benjamin Northcutt

For those malefactors who wish to conduct malicious information operations, various AI tools are already useful and will continue to improve. Dangerously, still images, video, and audio can be mocked up as completely faked evidence of some sort of CBRN incident. We’ve already had non-AI versions of this scam, with images taken from other sources and misrepresented on social media. Reverse-image searches quickly debunk such recycling of images. Yet totally new images of deep-fake quality would be impervious to reverse-image searches.

Further AI techniques are similarly disturbing. We already know that AI tools can concoct fake laboratory data. A brief news item in the 22 November 2023 issue of Nature cites one such problematic incident. What if, during and after the Beirut explosion, AI tools invented radiation sensor readings? AI inventing fake article citations claiming that tear gas really is a nerve agent is certainly plausible. Fake patent citations and fake case law citations have been seen as AI outputs in the last year. We have long needed tools to help debunk disinformation. It now seems clear that we will have to develop tools to deduce the hidden hand of AI.

Opportunities: What can AI do to help?

Enough with the downsides. Are there positive uses for AI and machine learning with regard to CBRN threats? There certainly will be some areas in which these new technologies will have some application. One way to look at the issue is that AI and machine learning are merely another step, albeit a large one, in a continued improvement in computing capabilities. Computers and data processing are now integral to many CBRN defence tasks, so it stands to reason that those improvements in information technology, AI or otherwise, will improve aspects of CBRN operations as a matter of course.

The utility of general-purpose AI models such as ChatGPT in CBRN affairs is still a largely untested area. However, there is a reasonable track record wherein AI and machine learning tools are focused on specific narrow tasks. Three particular aspects of CBRN countermeasures seem to be natural areas wherein specialised AI tools may be helpful: non-proliferation intelligence, hazard modelling, and networked detection.

Historically, countries have gone to lengths to hide the development, production, testing, and stockpiling of CBRN weapons. The question of whether or not a particular country has offensive CBRN programmes is often a hard one for intelligence services to analyse. Rarely is there a single piece of information that proves or disproves the existence of a programme. Analysts examine lots of small pieces of information to try to put together a jigsaw puzzle. The types of information that might be useful for finding a covert CBRN programme are quite varied. Only the largest and best-resourced intelligence services are likely to have the resources to do a thorough effort of collection and analysis.

Credit: US Army/Sgt Benjamin Northcutt

However, you could train an AI tool to do some of this work. AI tools could sift through lots of raw data looking for clues and do it in ways that are faster than human analysts. Could an AI look at thousands of small clues and come up with useful intelligence in ways that a human analyst might not? How long might it take for human analysts to sift through the CVs and publication records of thousands of scientists to look for trends? A phenomenon such as ten organophosphate specialists disappearing from social networks and ceasing to publish might be of interest if Country X is suspected of making nerve agents. Perhaps tasks such as these are already being done, but this correspondent has no direct knowledge of such, of course.
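The publication-record idea can be made concrete with a very small sketch. What follows is not a description of any real intelligence tool, which this correspondent has no knowledge of; it is only a toy illustration, in Python, of the kind of trend-spotting described above. The function name `authors_gone_quiet`, the `(author, year)` record format, and the thresholds are all assumptions invented for this example.

```python
from collections import defaultdict


def authors_gone_quiet(records, cutoff_year, min_prior_papers=3):
    """Flag authors who published regularly and then stopped.

    records: iterable of (author, publication_year) pairs (hypothetical format).
    An author is flagged if they have at least `min_prior_papers` papers
    before `cutoff_year` and none in or after it.
    """
    before = defaultdict(int)  # papers published before the cutoff
    after = defaultdict(int)   # papers published in or after the cutoff
    for author, year in records:
        if year < cutoff_year:
            before[author] += 1
        else:
            after[author] += 1
    return sorted(
        author
        for author, count in before.items()
        if count >= min_prior_papers and after[author] == 0
    )


# Invented example data: A stops publishing, B carries on, C never published much.
records = [
    ("A", 2015), ("A", 2016), ("A", 2017),
    ("B", 2015), ("B", 2016), ("B", 2017), ("B", 2021),
    ("C", 2016),
]
quiet = authors_gone_quiet(records, cutoff_year=2019)
```

A single name on such a list means very little, since people retire, change fields, or move into industry. The point made above is about clusters: ten specialists in one narrow field falling silent at once is a piece of the jigsaw that a human analyst might then want to examine.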

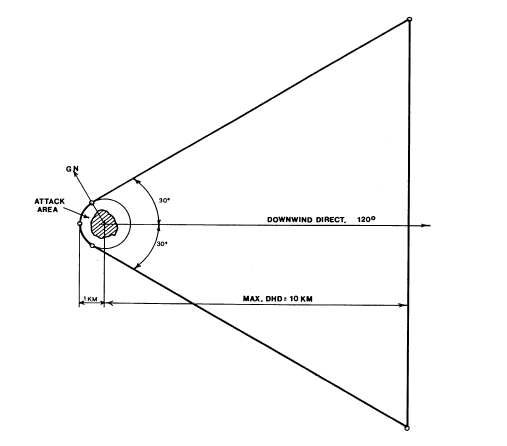

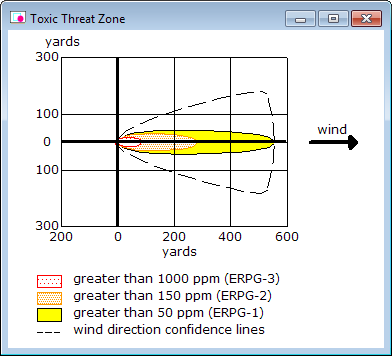

‘Hazard prediction’ in CBRN is basically a term for ‘if such and such a CBRN material is released, where will it travel?’ In older times, and still today in some settings, very simplistic models and templates have been used to plot simple triangles on a map. This correspondent remembers doing some in the dark with a red-lens flashlight on a NATO 1:50,000 map in his early training. Increasingly sophisticated computer models have supplanted but not completely replaced antiquated analogue methods. Larger models take a lot of input and do a lot of calculations. The largest hazard prediction capabilities, such as NARAC at the USA’s Lawrence Livermore National Laboratory, use supercomputers and a large number of PhD-level staff. Identifying all of the relevant inputs, searching for data, and crunching the numbers to produce a relevant and accurate hazard prediction seems a task well suited for AI and machine learning. At a minimum, an AI model could serve as the front-end and feed useful inputs into an existing hazard prediction model. A well-crafted AI tool could very quickly interrogate thousands of useful datapoints, such as meteorological sensors, across an area of interest.
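For readers who have never drawn one, the ‘simple triangle on a map’ can be sketched in a few lines of Python. This is a deliberately crude illustration and not the official NATO procedure or anything resembling a NARAC model: the 30-degree half-angle, the flat local grid in metres, and the function names `hazard_triangle` and `inside_triangle` are all assumptions made for this example.

```python
import math


def hazard_triangle(release_xy, wind_from_deg, downwind_m, half_angle_deg=30.0):
    """Return three (x, y) corners of a crude downwind hazard triangle.

    Coordinates are metres on a flat local grid (x = east, y = north).
    wind_from_deg uses the meteorological convention: the direction the
    wind blows FROM, in degrees clockwise from north.
    The half-angle is an illustrative assumption, not a doctrinal value.
    """
    x0, y0 = release_xy
    bearing = (wind_from_deg + 180.0) % 360.0  # direction the cloud travels
    corners = [(x0, y0)]  # apex sits on the release point
    for offset in (-half_angle_deg, half_angle_deg):
        theta = math.radians(bearing + offset)
        corners.append((x0 + downwind_m * math.sin(theta),
                        y0 + downwind_m * math.cos(theta)))
    return corners


def inside_triangle(point, tri):
    """True if point lies inside or on the edge of triangle tri."""
    def cross(a, b, p):
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

    a, b, c = tri
    signs = [cross(a, b, point), cross(b, c, point), cross(c, a, point)]
    # Inside if the point is on the same side of all three edges.
    return all(s >= 0 for s in signs) or all(s <= 0 for s in signs)


# Wind from the west (270 degrees), so the hazard area extends east.
tri = hazard_triangle((0.0, 0.0), wind_from_deg=270.0, downwind_m=1000.0)
downwind_hit = inside_triangle((500.0, 0.0), tri)
upwind_hit = inside_triangle((-500.0, 0.0), tri)
```

Everything that makes a real model useful, such as terrain, changing weather, and the properties of the material released, is missing here. That gap between the triangle and the supercomputer is exactly where better data handling has room to help.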

Half a century ago, CBRN detection was usually a handful of expensive, sophisticated devices manually operated by highly trained CBRN specialists. In the modern era, with vastly improved technologies, miniaturisation of electronics, and digital communications, there is no technical impediment to proliferating thousands of CBRN sensors all over the modern battlefield or a city. Managing, meaningfully interpreting, and integrating all of this data with other forms of information, however, is a task that still befuddles CBRN specialists. A narrow-scoped AI that tends a sensor network can likely weed out false alarms and provide near real-time situational awareness in both military and civil protection environments. By looking at a lot of different types of sensor outputs, and not all of them necessarily specific CBRN sensors (e.g., temperature sensors, wind direction sensors), there is the prospect of a much more accurate alert. Combined with the hazard prediction capability described above, an alert can be confined strictly to the area where it is needed, thus reducing impact on military missions.
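The simplest form of weeding out false alarms needs no AI at all, and a sketch of it shows what a cleverer system would be improving upon. The Python below only accepts an alarm if a second, different sensor nearby alarms at about the same time. It is a toy rule under stated assumptions: the `Reading` record, the function name `corroborated_alarms`, and the 500-metre and 60-second thresholds are hypothetical and are not taken from any fielded system.

```python
import math
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    x: float      # metres east on a local grid
    y: float      # metres north on a local grid
    t: float      # seconds since some reference time
    alarm: bool   # True if the sensor reported a detection


def corroborated_alarms(readings, radius_m=500.0, window_s=60.0, min_sensors=2):
    """Keep only alarms backed by enough distinct sensors nearby in space and time."""
    alarms = [r for r in readings if r.alarm]
    confirmed = []
    for r in alarms:
        # Distinct sensors (including this one) alarming close by, at about the same time.
        supporters = {
            o.sensor_id
            for o in alarms
            if abs(o.t - r.t) <= window_s
            and math.hypot(o.x - r.x, o.y - r.y) <= radius_m
        }
        if len(supporters) >= min_sensors:
            confirmed.append(r)
    return confirmed


readings = [
    Reading("s1", 0.0, 0.0, 0.0, True),
    Reading("s2", 100.0, 0.0, 10.0, True),    # close to s1, so the two corroborate
    Reading("s3", 5000.0, 0.0, 5.0, True),    # lone alarm far away: likely false
    Reading("s4", 50.0, 0.0, 0.0, False),     # no detection
]
confirmed_ids = [r.sensor_id for r in corroborated_alarms(readings)]
```

A fixed rule like this will also throw away a genuine alarm from an isolated sensor. Weighing wind, temperature, and other non-CBRN inputs to make that call more intelligently is where a narrow-scoped AI tool could plausibly earn its keep.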

Credit: US Government

Safeguarding the future

Many people are legitimately concerned about what threats AI and related technologies may bring. Some have even voiced existential fears. Because of the nature of CBRN threats, combining these threats in people’s minds has caused some degree of anxiety. What can be done? Are we actually right to worry?

To a certain extent, this author believes that worries are slightly overblown. AI does not fundamentally alter the calculus that CBRN warfare is inherently more expensive, less reliable, more dependent on weather variables, and often less effective than sophisticated conventional means of lethality. Explosives and firearms are still going to be far easier for terrorist groups than nerve agents, despite whatever information a chat with an AI might provide. Also, historically, the defensive arms race in CBRN has been more fruitful than the offensive one. Defensive measures, which have continued for decades long after meaningful technical developments ceased, have more than matched most CBRN threats. This basic fact will not change.

For the most part, CBRN hazards lie within a much broader panoply of potential AI hazards. Broader safeguards are likely to have a direct benefit in CBRN affairs. Creators of AI tools must be responsible for helping to make them safe. Governments, standards bodies, and regulators of every type can develop and advocate for codes of conduct and good working practices, as well as laying down a regulatory framework. As with cars, aircraft, medicines, and other technological aspects of modern life, safety needs to be actively promoted and guided. It is what taxpayers want and demand.

We have long agreed that cars need crash safety standards and seatbelts. However, the problem with AI is that few of us know what these standards and seatbelts look like. Society and industry need to develop them. Within CBRN defence, some early promise has been shown by red-teaming efforts. These have involved experts deliberately poking holes in and exploiting an AI to see the extent of possible impact, and can help in the development of safeguarding policies. AI creators big and small need to develop the ‘seatbelts’ that make their products safe and limit their ability to cause harm in CBRN or other areas. The problems posed by possible disinformation could be mitigated by ‘watermarking’ – the idea that creative output by AI systems is cleverly marked so that it can be seen by all as artificial output.
