Artificial intelligence (AI) and machine learning (ML) technologies are making their presence felt in the signals intelligence world, but as much as these approaches herald benefits, they may also carry inherent risks.

Computing giant IBM defines AI as “technology that enables computers and machines to simulate human intelligence and problem solving.” In short, these are computers and machines which behave in a similar way to the human brain. The terms AI and ML are often used interchangeably, but this can be erroneous. IBM defines ML as a branch of AI that uses “data and algorithms to enable AI to imitate the way that humans learn, gradually improving its accuracy.”

To return to the brain analogy, your mind can be considered a blank canvas when you are born. You arrive in this world hard-wired with some knowledge such as how to eat and breathe, but not how to speak classical Arabic. Skills and behaviours like the latter must be learned. The learning process involves collecting data. We do this all the time without even realising it. Returning to the classical Arabic analogy, if you are learning the language, your teacher is sharing data with you in the form of information that will enable this. The prolonged organisation of this data will enable you to learn the language. ML is the process of collecting and organising the data, while AI is the way your brain then uses this information to know and use classical Arabic.

The human brain is ideally designed for the crazily complicated world in which we live. Every day we make thousands of decisions all based on our life experience and the knowledge we have acquired to date. We even make predictions based on our experiences in the past. We know not to cross a busy road as we have learned that fast traffic can kill and injure, and that there is a high probability this could happen to us if we cross the road now. We avoid taking the bus during rush hour if we can because the past has taught us that it is likely to be fully packed. It therefore makes sense that we are increasingly using AI and ML in computing to help make sense of complexity.

Radio soup

Increasing complexity is a hallmark of the radio spectrum, the part of the electromagnetic spectrum stretching from 3 kHz up to 300 GHz. Within this waveband radars, radios and satellite navigation systems do their work, with militaries depending extensively on all three capabilities. The problem with the radio spectrum is that militaries are not the only users. Civilian cellphone networks depend on radio frequencies, as do radio and television broadcasting. Likewise civilian marine, coastal, aircraft and air traffic control radars all rely on the radio spectrum. This is just a small selection of global radio spectrum users. There are many more which can be as diverse as medical imaging and radio astronomy. Existing and growing radio spectrum congestion is illustrated by the ever-growing number of wireless device users globally.

Credit: I’MTech

Ericsson produces highly respected analysis of global wireless device use. In late 2023 the company’s mobile subscriber outlook said that 1.4 billion subscribers globally were using fifth-generation (5G) cellular protocols. These new cellphone and wireless device protocols promise a step change in the quantities of data, and the number of subscribers, that can be hosted on individual networks. An analysis drafted by Thales stated that 5G networks could offer data rates exceeding 10 Gbps, which according to the company is up to 100 times faster than the data rates achievable with fourth-generation (4G) protocols. More 5G devices can be hosted on a single network node than is possible with 4G, Thales continued. A node could include an individual cellphone tower potentially connecting one million devices per square kilometre (0.4 square miles).

Help is at hand

The rolling out of 5G is good news for the cellphone user but worrisome for militaries, particularly for Signals Intelligence (SIGINT) professionals. As the spectrum gets increasingly congested, how will SIGINT cadres detect, locate and identify a signal of interest in this electromagnetic swamp? Military radars and radios are purposefully designed to transmit discreet signals which may change frequencies thousands of times per second. The point of such behaviours is precisely to frustrate attempts to detect these signals in the first place. Detect, locate and identify a radar and you probably detect, locate and identify the aircraft, ship or missile battery it equips. The same is true for any radio. It is even possible for SIGINT cadres to detect and locate individual troops based on their radio signals, along with vehicles and bases. Nonetheless, the challenge remains finding the signal in the first place. This could get harder than ever thanks to innovations in signal discretion. An increasingly congested spectrum will not help.

To exacerbate matters, signal detection can be time sensitive. For example, perhaps an enemy warship is illuminating your own, or an allied, vessel to get ready to launch an anti-ship missile. Alternatively, has the missile activated its radar for a brief period to check the ship’s radar cross section (RCS) against the RCS the radar has stored in its memory? Processes like these are used by radars to confirm their target. In short, how can a signal be detected, located, identified and, if necessary, tracked in a timely manner in a congested environment? Do AI and ML have roles to play in helping to address these vexing challenges?

The first step is to detect the signal. An electronic support measure (ESM) continually monitors a segment of the radio spectrum to spot signals of interest. Returning to our above warship analogy, perhaps the ESM is monitoring the ship’s locale for X-band (8.5 GHz to 10.68 GHz) radar signals. X-band is a popular choice for naval fire control radars, as these frequencies can render a target in impressive detail. If the ESM is monitoring the entire 8.5 GHz to 10.68 GHz waveband it may capture many X-band signals that are not of interest. For example, the ESM might detect friendly X-band fire control radar signals, or X-band signals being used for satellite communications. A skilled electronic intelligence (ELINT) operative can recognise the signals of interest found by the ESM. Nonetheless, as the spectrum gets more congested, this process could get more challenging. This is not to suggest that we should be taking the human out of the loop, but AI and ML have an important supporting role to play.
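
The sifting step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Detection` record, the emitter labels and the frequencies are hypothetical, and the band edges are simply those quoted in this article. A real ESM works on far richer data than a single centre frequency.

```python
from dataclasses import dataclass

# X-band edges in GHz, as quoted in the article.
X_BAND_GHZ = (8.5, 10.68)


@dataclass(frozen=True)
class Detection:
    emitter_id: str       # hypothetical label assigned by the receiver
    frequency_ghz: float  # measured centre frequency


def signals_of_interest(detections, band=X_BAND_GHZ, not_of_interest=frozenset()):
    """Keep detections inside the band that are not already known and dismissed."""
    low, high = band
    return [
        d for d in detections
        if low <= d.frequency_ghz <= high and d.emitter_id not in not_of_interest
    ]


# Hypothetical intercepts: one friendly fire control radar, one out-of-band
# radar and one unidentified X-band emitter.
detections = [
    Detection("friendly-fcr", 9.4),
    Detection("s-band-search", 3.1),
    Detection("unknown-1", 9.7),
]
hits = signals_of_interest(detections, not_of_interest={"friendly-fcr"})
print([d.emitter_id for d in hits])
```

Only the unidentified in-band emitter survives the filter. The hard part, which this sketch does not attempt, is deciding what belongs on the dismissed list in the first place.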

Credit: BAE Systems

A written statement provided to this author by ELT Group said that “AI and ML algorithms can be trained to autonomously detect signals within the raw data (coming into the ESM). This can help in identifying relevant communications signals amidst large volumes of noise.” Performing this identification will see the use of ML algorithms that “can classify signals based on various parameters such as frequency modulation and waveform characteristics.” In essence, it is not just a case of detecting the signals, but also understanding their composition. This can be challenging.
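
To give a flavour of what classifying signals by their parameters can mean, here is a minimal sketch of a nearest-centroid classifier. It is not ELT Group’s method, which the statement does not describe. The two features (centre frequency and pulse width), the labels and every number are invented for illustration, and the features are left unscaled for brevity.

```python
import math
from collections import defaultdict


def train_centroids(samples):
    """Average the feature vectors recorded for each label."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(features, centroids):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical training data: (centre frequency in GHz, pulse width in microseconds).
training = [
    ((9.4, 0.5), "fire-control"),
    ((9.5, 0.6), "fire-control"),
    ((9.3, 1.8), "navigation"),
    ((9.4, 2.0), "navigation"),
]
centroids = train_centroids(training)
label = classify((9.45, 0.7), centroids)
print(label)
```

The learning here is nothing more than averaging labelled examples. A new intercept is then assigned to whichever average it most resembles, which is why the quality of the labelled examples matters so much.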

Detecting unusual behaviour is something else that AI and ML could assist with. Perhaps an unusual signal has appeared within the raw ELINT data the ESM is collecting. It may not be immediately obvious that the signal is unusual, but AI techniques could help “identify unusual patterns or behaviours in the signal data, which may indicate potential threats or interesting events”, according to ELT Group’s statement. From a communications intelligence perspective, these techniques could help to identify radio networks connecting a battalion or brigade.
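
One simple way to flag an unusual pattern is to measure how far each observation sits from the typical value. The sketch below uses the median and the median absolute deviation, a standard robust statistic. It is offered as an assumption about how such a check might look, not as a description of any fielded system, and the pulse repetition intervals are invented.

```python
import statistics


def unusual_indices(values, threshold=3.0):
    """Flag values lying more than `threshold` robust deviations from the median."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread in the data, so nothing can be ranked as unusual
    return [i for i, v in enumerate(values) if abs(v - median) / mad > threshold]


# Hypothetical pulse repetition intervals in microseconds from one emitter.
intervals_us = [1000, 1002, 998, 1001, 999, 1400, 1000]
flagged = unusual_indices(intervals_us)
print(flagged)
```

The sixth measurement stands well apart from the rest and is the only one flagged. Whether it signals a threat or merely a bad reading is still a judgement for the analyst.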

With such techniques, not only is the ESM using ML and AI to tease out signals from the noise, but the system is recognising several similar signals across a defined area. Has the electronic support measure in fact detected several tactical communications nets and the radios that are hosting these? ML algorithms and the AI they serve are continually collecting data. Just as our own brain learns not to cross a busy road full of fast traffic, so AI learns from past experiences. Once the ESM has learned that an enemy X-band radar uses a certain frequency, it can alert us every time that frequency is detected. Although this is a simplistic example, AI offers the potential to provide highly complex predictions concerning potential future events by observing the radio spectrum.

Beware of panaceas

It may seem from this article that the adoption of AI and ML as part of the SIGINT practitioner’s toolbox is some years in the future, but this is not the case. The technologies are being adopted to this end: “AI and ML approaches are already being used in various capacities within the field of SIGINT,” said ELT Group’s statement, adding “although the extent of their implementation may vary across different agencies and organisations.” The biggest uptake may be in the civilian SIGINT gathering domain, where investment is flowing. AI and ML are helping to enhance processing, analysis and data management. Domestic intelligence and law enforcement agencies may have to sift through millions of cellphone records to monitor known or suspected spies, criminals and insurgents. Furthermore, this must be done day in, day out. As such, it makes sense to adopt any technologies which can help ease this burden.

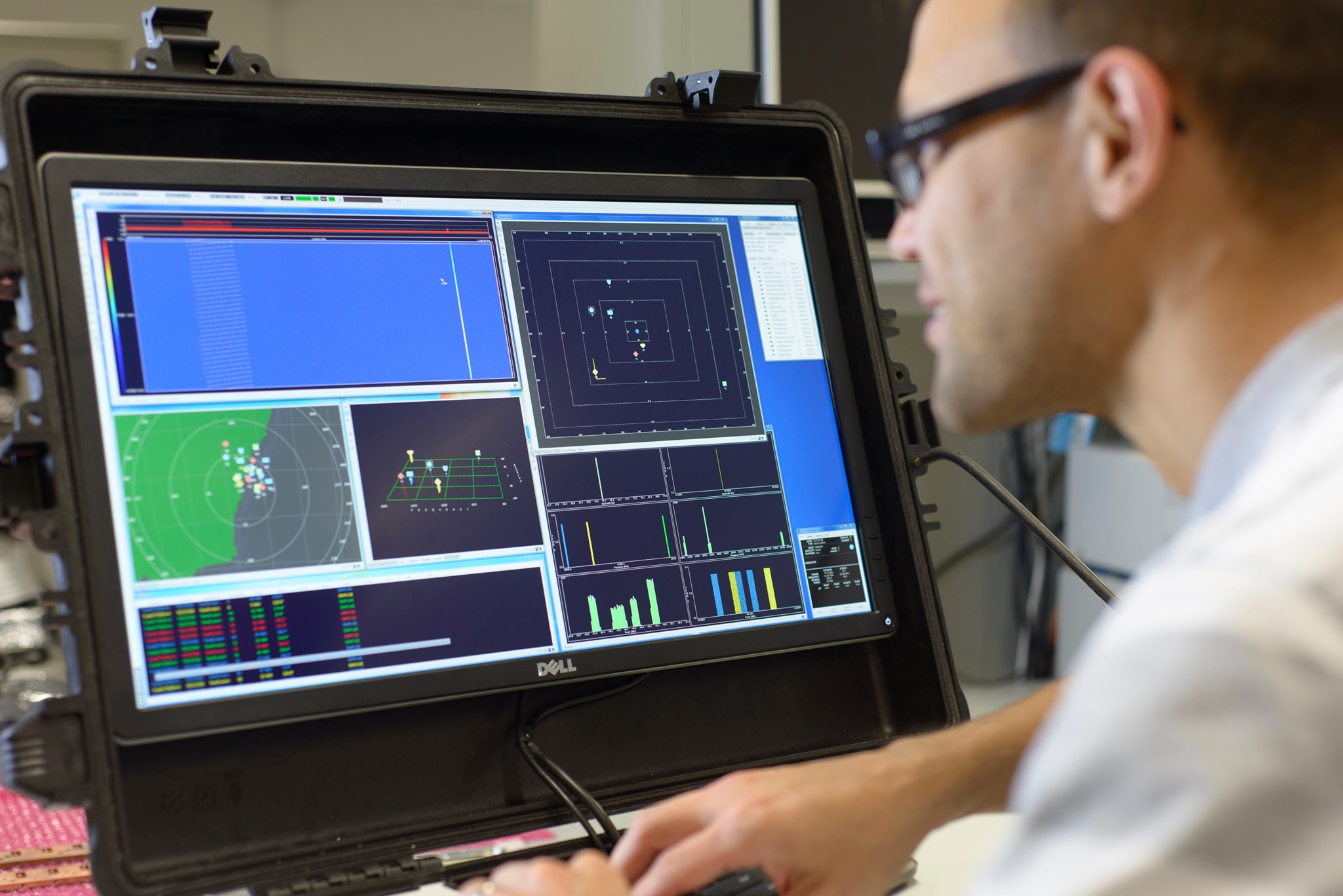

Credit: US Army

Nonetheless, embracing such technologies must always be balanced with privacy concerns: “There could be a risk that sensitive information may be improperly accessed or used, infringing on individuals’ privacy rights,” warned ELT Group. Concerns over privacy dovetail with other ethical worries: “(T)ransparency, accountability, and adherence to international laws and conventions governing surveillance activities,” are all valid concerns, the statement noted.

As this discussion regarding privacy concerns shows, it may seem that AI and ML offer untold gifts to the SIGINT practitioner, but the adoption of both is not without challenges and potential pitfalls. First and foremost, any AI is only as good as the data the ML algorithms collect and process. Signals of interest in the military domain may be few and far between. After all, the very signals that are being targeted are optimised to be hard to find. As much as AI is tackling the vexing task of SIGINT collection, interpretation and management in a contested and congested spectrum, so these approaches will no doubt be exploited to improve signal discretion.

It is imperative to avoid introducing bias into AI and ML algorithms which could negatively affect how data is interpreted. If for instance you were to only load the computer of an insurance company with details of red cars that are stolen, the algorithms may deduce that blue and yellow cars are safe from criminals. In the SIGINT context “biased algorithms may result in incorrect classifications or interpretations of intercepted communications, leading to erroneous conclusions or actions,” according to ELT Group’s statement. Bias could be exacerbated because of incomplete data.
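
The red car example can be made concrete with a toy calculation. The records below are invented. The point is only that a summary built from a one-sided sample reports a one-sided world.

```python
from collections import Counter


def theft_share_by_colour(records):
    """Share of recorded thefts attributed to each colour present in the data."""
    counts = Counter(colour for colour, stolen in records if stolen)
    total = sum(counts.values())
    return {colour: n / total for colour, n in counts.items()}


# Hypothetical full picture: thefts occur across all colours.
all_records = [
    ("red", True), ("blue", True), ("yellow", True),
    ("red", False), ("blue", False),
]
# Biased sample: only the stolen red cars were ever loaded.
biased_records = [r for r in all_records if r == ("red", True)]

biased_view = theft_share_by_colour(biased_records)
full_view = theft_share_by_colour(all_records)
print(biased_view)
print(sorted(full_view))
```

Fed only red cars, the summary attributes every theft to red cars and knows nothing of the blue and yellow ones. The arithmetic is correct in both cases. It is the input that misleads.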

Dr. Sue Robertson, an EW expert and director of EW Defence, warns that ELINT data can often be incomplete: “You never collect enough of a signal with an ESM to make a proper characterisation,” she warns. “You may be trying to detect and identify one signal in an environment where you have a myriad of signals with overlapping characteristics.” If you are collecting ELINT from a busy stretch of water, your ESM may be capturing millions of individual maritime and naval radar pulses. Even if 99.9 percent of these are irrelevant, every million pulses captured still leaves 1,000 that may be relevant, and perhaps only a handful of these relate to the vessel which interests you.
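
The arithmetic behind those figures is worth spelling out. The sketch assumes a capture of exactly one million pulses, the low end of “millions”, and takes “a handful” to mean five. Both numbers are illustrative assumptions.

```python
from fractions import Fraction

captured_pulses = 1_000_000           # assumed: the low end of "millions"
irrelevant_share = Fraction("0.999")  # 99.9 percent, as quoted in the article
pulses_from_target = 5                # assumed size of "a handful"

# Exact arithmetic with fractions avoids floating-point rounding.
possibly_relevant = int(captured_pulses * (1 - irrelevant_share))
target_share = Fraction(pulses_from_target, captured_pulses)

print(possibly_relevant)  # pulses left after discarding the irrelevant 99.9 percent
print(target_share)       # fraction of the whole capture that matters
```

On these assumptions the analyst, or the algorithm, is hunting for five pulses in every million, and must still sort them from 995 plausible lookalikes.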

As Robertson cautioned, AI software will only ever be as good as the data it is programmed with, and this can create problems if that data is unreliable. These realities raise the question of how AI and ML approaches are to interpret the ELINT they receive if that data is often incomplete. Another concern is fallibility. Humans can learn from their errors if they choose to. Is this the case for the AI and ML algorithms? “Machine learning has to be able to learn from its mistakes,” Robertson emphasised.

Credit: US DoD

The adoption of these technologies will not see the human removed from the collection, interpretation and dissemination process intrinsic to all forms of intelligence gathering. The goal of AI and ML is to make the human’s life easier, not to replace them. There are ethical questions surrounding the removal of the person from this process, assuming that this is desirable or even possible. As ELT Group’s statement noted, “the need for human oversight to ensure the accuracy and reliability of analysis” must remain paramount. Equally important is the need to protect AI and ML enabled systems from cyberattack. “Storing and processing large volumes of sensitive SIGINT data using AI and ML systems could present cybersecurity risks,” the company warned, adding, “Data breaches, unauthorised access and insider threats” must all be considered, and noting, “Protecting classified intelligence data from exploitation or compromise is crucial to maintaining national security.”

Ignoring or downplaying concerns risks disaster, according to ELT Group: “Misinterpretation or the misapplication of intelligence derived from AI and ML could potentially escalate conflicts or lead to unintended consequences. Inaccurate assessments of threats or hostile activities may prompt disproportionate responses, exacerbating tensions or triggering military actions.” Wars have started because of the dubious interpretation of dubious intelligence, as with the US-led war in Iraq which began in 2003. The adoption of AI and ML techniques in signals intelligence work should be done to lower such risks, not increase them.

How can these dangers be reduced? A multilayered approach shows promise: “Addressing these risks requires careful consideration of ethical, legal, and technical frameworks governing the use of AI and ML in SIGINT operations,” ELT Group emphasises. “Robust oversight mechanisms, transparency in algorithmic decision-making, and adherence to privacy and civil liberties protections are essential to mitigate potential harms and ensure responsible use of these technologies in intelligence activities.” Ultimately, as Dr. Robertson observed, “when you boil it all down, AI may be able to do something much faster than a human can work, but not necessarily more accurately.”

Thomas Withington