Displays are central to modern military electronic systems, presenting sensor data, communications, and system status. As the primary link between human and machine, displays must be carefully integrated into the overall system architecture so that information is presented to operators reliably and effectively.

A key consideration is balancing the interfaces between the electronic systems that supply the information, the human-machine interface, and the physical, electromagnetic, and environmental conditions in which the system operates. Getting this integration right is essential to using the system efficiently and to mission success.

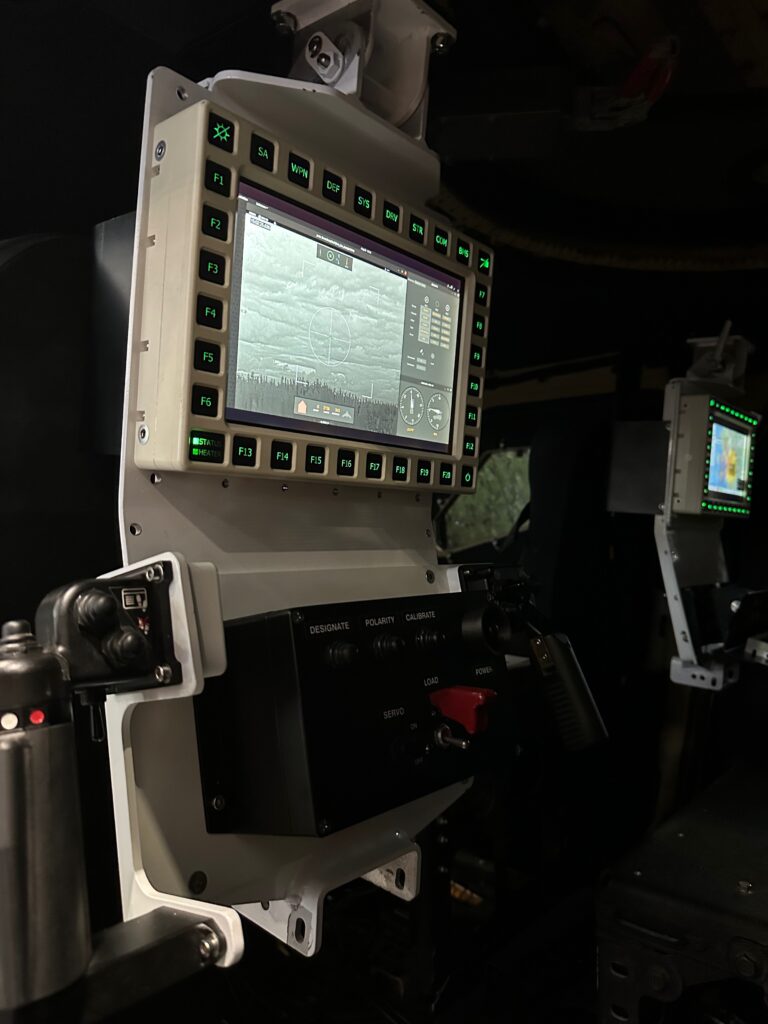

Built for the Military Environment

Unlike commercial electronics, military displays often operate as part of a networked group, drawing on multiple video and data sources and serving several operators, both on board the platform and remote from it. Modern open system frameworks such as the US military-backed Future Airborne Capability Environment (FACE) and Sensor Open Systems Architecture (SOSA), the US Army’s Vehicular Integration for C4ISR/EW Interoperability (VICTORY) initiative, and the United Kingdom Ministry of Defence (MOD) Generic Vehicle Architecture (GVA) are paving the way for standardised vehicle electronic architectures based on common physical specifications, electronic data buses, network adaptors, connectors, and protocols.

Credit: Tamir Eshel

To distribute video from multiple sensors to many displays, these architectures require video distribution units and switches that handle both digital and analogue signals. These allow operators to view multiple video sources simultaneously with minimal latency and make the most efficient use of the information the system gathers. Many applications also require all of this information to be recorded or transmitted to other users.

Where recording or downlink relies simply on the display output, it mirrors whatever is shown on the operator’s screen, limiting the recorded and downlinked imagery to the operator’s view alone.
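As a rough illustration of how a video distribution unit decouples sources from displays, the Python sketch below models a crossbar in which any sensor feed can be routed to any number of sinks, including a recorder that captures every source rather than only what an operator happens to be viewing. The class and all source and sink names (`VideoMatrix`, `day_camera`, `recorder` and so on) are hypothetical; fielded units perform this switching in dedicated hardware under whichever architecture standard the platform follows.

```python
from dataclasses import dataclass, field


@dataclass
class VideoMatrix:
    """Hypothetical crossbar switch: any source can feed any number of sinks."""

    sources: set[str]
    sinks: set[str]
    # Maps each sink (display, recorder, downlink) to the sources it receives.
    routes: dict[str, set[str]] = field(default_factory=dict)

    def route(self, source: str, sink: str) -> None:
        """Add a source to a sink's feeds, rejecting unknown endpoints."""
        if source not in self.sources:
            raise ValueError(f"unknown source: {source}")
        if sink not in self.sinks:
            raise ValueError(f"unknown sink: {sink}")
        self.routes.setdefault(sink, set()).add(source)

    def feeds(self, sink: str) -> set[str]:
        """Return a copy of the sources currently routed to a sink."""
        return set(self.routes.get(sink, set()))


matrix = VideoMatrix(
    sources={"day_camera", "thermal", "radar"},
    sinks={"commander_display", "gunner_display", "recorder"},
)
# Each operator selects a feed independently of the others.
matrix.route("day_camera", "commander_display")
matrix.route("thermal", "gunner_display")
# The recorder takes every source, not just a mirror of one operator's screen.
for src in matrix.sources:
    matrix.route(src, "recorder")
print(sorted(matrix.feeds("recorder")))  # ['day_camera', 'radar', 'thermal']
```

Keeping the recorder as an independent sink on the switch, rather than tapping a display output, is what avoids the limitation described above.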

Displays are optimised for the task at hand. Some are designed for minimal size, weight, and power (SWaP) to serve as endpoints in an integrated system; unlike many of today’s short-lived commercial electronics, such displays must remain operational for 10–20 years. More sophisticated and expensive ‘smart displays’ integrate an advanced computer, graphics processor, and communications protocols, enabling the display to take on the functions of an entire system. This architecture is especially useful when upgrading legacy platforms, where installing an integrated system of systems would be too costly. However, the useful lifespan of smart displays is limited, as they require frequent upgrades to keep pace with rapid advances in electronics.

Military displays differ from commercial equipment in that they are designed either to withstand the high loads of the aerospace environment or as rugged systems that survive the shock, vibration, and temperature extremes of field use in combat vehicles. The applicable military standards also specify how they are sealed and protected against damage from sand, water, and salt. Displays must remain readable in bright sunlight and must not interfere with users operating night vision imaging systems (NVIS). They must also avoid causing electromagnetic interference (EMI) and prevent information leakage to nearby equipment.

Virtual and Mixed Reality

Military displays come in many sizes, form factors, and resolutions, ranging from large-area displays (LAD) with touchscreens and dozens of pushbuttons integrated into the bezel to micro-displays embedded in helmets, sights, optical devices, and wearable systems.

Other devices project images onto opaque or transparent visors embedded in binoculars, weapon sights, and head-mounted systems to give pilots and warfighters an immersive view. The latest trend in this field is the mixed reality display, which enables unmanned aerial vehicle (UAV) operators to fly their drones in first-person view (FPV), as if seated inside them, using virtual reality (VR) goggles and joysticks or hand controllers. Over the past year, this concept has spawned a new category of loitering munitions known as ‘FPV drones’, widely used by both sides in Ukraine. These low-cost loitering munitions are often built by volunteers in informal workshops and are typically used to engage land platforms from a safe distance.

In combat vehicles, electronic displays provide the situational awareness the crew needs to assess and react to events outside the vehicle. Emerging applications for armoured vehicles include ‘see-through armour’, which uses either wearable goggle-type displays or flat displays embedded in windows, doors, and walls, providing the crew with ‘smart windows’ to the outside world.

For mission planning and rehearsal, VR headsets immerse users in synthetic environments, helping them become familiar with operational areas and scenarios in 3D. Advanced VR simulations add directional audio, haptic feedback, and motion platforms to heighten realism. Integrated augmented and virtual reality (AR/VR) training shortens learning curves and improves mission readiness, while ongoing optronics research is maturing further display technologies for military adoption.

Credit: Tamir Eshel

Intelligence analysts and system operators use workstations composed of multiple displays, some providing three-dimensional imaging, to monitor many data sources simultaneously. With the aid of artificial intelligence (AI), they can draw on data from high-resolution cameras to display the most operationally useful information for a given scenario.

Optimising Usability with Human-Machine Interfaces

The human-machine interface (HMI) connecting the operator to a display system is as important as the display technology itself. The HMI encompasses both how information is visually presented and how the user physically interacts with it. Intuitive HMIs tailored to specific platforms and mission roles are crucial to realising a display’s full utility.

Modern aircraft use ‘glass cockpit’ HMIs that fuse flight instrumentation (altitude, airspeed, and engine status), situational awareness data, and system status onto reconfigurable multi-function flat-panel displays using layered synthetic graphics. These screens typically combine touch control with bezel pushbuttons so that crews can operate them effectively while wearing gloves. Gaze tracking offers another method of interaction: the system follows the user’s eye movements and uses the viewing direction to cue systems, dynamically overlaying data or target designations.

To further expand display interfaces, gesture inputs allow intuitive commands such as swiping to pan and zoom high-resolution camera feeds. Voice recognition enables hands-free activation of display modes and menus. Motion inputs from helmet trackers orient the displayed perspective according to the operator’s head position. Haptics and force feedback further heighten user engagement.

By combining interactive multi-layered graphics, three-dimensional presentation, diverse sensor modes, and adaptive interfaces, advanced display HMIs give operators a more immersive and intuitive experience. This helps them focus their attention and interact more efficiently across diverse missions, including drone control, intelligence gathering, and precision targeting.

Newer systems, such as the F-35 helmet, project spherical imagery onto the visor, immersing the pilot in a synthetic visual environment that includes views below and around the aircraft. Crews of armoured vehicles can likewise use augmented reality systems to view the outside world while remaining protected inside. Their helmet visors draw on peripheral cameras to create a panoramic view overlaid with threats, navigation references, and mission updates. Such displays also improve driver orientation and situational awareness.

Lightweight head-worn displays resembling eyeglasses are being widely adopted for hands-free data visualisation in the field. Headset-based remote mentorship systems share a leader’s viewpoint, annotations, and guidance with distributed teams; enable commanders at different locations to share a common situational view; and allow medics to access remote, self-guided treatment resources while keeping their hands free for patient care.

Specialised Displays Interpret Advanced Sensor Feeds

Cutting-edge sensors enable battlefield observation across the electromagnetic and acoustic spectra, far beyond the reach of natural human vision. However, interpreting feeds from sensors operating outside the visible spectrum requires specialised display processing and symbology. With adequate training in the underlying physics and in interpreting display symbology, operators can learn to ‘see’ through these sensors’ eyes, gaining a critical battlefield advantage.

For example, sonar systems translate underwater acoustic signals into visual displays, but effective interpretation relies on understanding the difference between active and passive sonar and how sound propagates through water. Similarly, radar turns radio-frequency reflections into a picture of the air or ground situation; Doppler processing, moving target indication (MTI), and synthetic aperture radar (SAR) imaging each add layers of information that must be presented to the user in a specific way. Thermal sensors, which register temperature differences, present an image-like view using greyscale gradients or, less commonly, false colour, revealing the thermal signatures of objects and targets. Digital imaging and displays enable combatants to exploit many of these sensors without the technical skills and extensive training required to operate legacy analogue systems.
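The greyscale presentation of thermal data can be illustrated with a minimal Python sketch, assuming the sensor delivers a frame of per-pixel temperatures. It applies a simple linear stretch between the coldest and hottest pixels in the frame, with ‘white-hot’ and ‘black-hot’ polarity options. This is a deliberate simplification: fielded imagers generally use more elaborate automatic gain control and contrast enhancement. The function name and the sample scene are hypothetical.

```python
def to_greyscale(frame: list[list[float]], white_hot: bool = True) -> list[list[int]]:
    """Linearly stretch a frame of temperatures to 8-bit grey levels (0-255).

    Simplified sketch: the coldest pixel maps to black and the hottest to white
    ('white-hot'); white_hot=False inverts the polarity ('black-hot').
    """
    flat = [t for row in frame for t in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    out = []
    for row in frame:
        grey_row = []
        for t in row:
            # A thermally uniform scene has no contrast; avoid dividing by zero.
            level = 0 if span == 0 else round(255 * (t - lo) / span)
            grey_row.append(level if white_hot else 255 - level)
        out.append(grey_row)
    return out


# Hypothetical scene in degrees Celsius: cool background with one warm target.
scene = [
    [15.0, 15.5, 16.0],
    [15.2, 37.0, 15.8],
]
image = to_greyscale(scene)
print(image[1][1])  # the 37 °C target renders as the brightest pixel: 255
```

Because the stretch is recomputed from each frame’s own minimum and maximum, a single hot object dominates the contrast; that trade-off is one reason real systems adopt more sophisticated gain control.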

Powerful Processing for Advanced Displays

The digital revolution has vastly increased the sensor data available for aggregation and display. However, humans can consciously evaluate only a limited amount of information at once before reaching cognitive overload. Advanced processing is therefore essential to extract only the operationally relevant information from continuous sensor data flows, and virtual 3D displays are becoming essential tools for presenting this multi-layered information in augmented or mixed reality.

Credit: Tamir Eshel

Enhanced by AI-enabled analytics, modern systems are designed to highlight what matters, fuse complementary feeds, and extract tactical meaning from various signals. Machine learning algorithms can be trained to perform human-like pattern recognition tailored to battlefield contexts. By taking on the tedious processing and analysis of sensor outputs, AI can relieve the cognitive burden on operators, allowing them to concentrate on higher-level insight and decision-making. Processing capability has become as important as display technology in converting sensor inflows into timely, actionable battlefield awareness.

The Big Picture

Digital displays are fundamentally transforming military command and control and situational awareness capabilities. Integrating high-performance visualisation into diverse manned and unmanned systems and platforms requires holistic optimisation of system interfaces, ergonomics, HMIs, and processing support. Wearable displays bring mission-critical data to operators across air, ground, and naval roles. As advanced multi-spectral sensors expand the observable spectrum and AI distils operational knowledge from endless sensor feeds, display technologies provide the ultimate conduit for human battlefield awareness.

Tamir Eshel